Trusting in the Age of AI

How will you know who wins next year’s election? It’s time to take The Sniff Test.

It’s important to have people we trust in our lives. Many of us trust celebrities and influencers, and surrender our privacy for whatever they’re promoting this week. A study in Psychological Science suggests we trust celebrities to make decisions on our behalf more than we trust family members. Occasionally, like Kanye, they let us down.

Earlier this month, researchers reported that artificial intelligence can generate faces that people judge to be more realistic than photographs of real people. These faces may be made into videos and other media content and used to sell us something. It is often said that most of our communication is body language and tone of voice rather than the words we say. If so, it would be easy to convince us that someone had said something unusual or outrageous, provided the fake looked, sounded and acted like them.

Microsoft’s Pound of Flesh

This week’s business news has been dominated by Sam Altman’s ousting from OpenAI and the machinations to bring him back. The media narrative is that the board of the non-profit organisation that controls OpenAI, whose members don’t own shares in the business and are tasked with protecting humanity, decided that Altman was a loose cannon whom humanity couldn’t trust.

Altman has said for years that protecting humanity should not mean a group of people in San Francisco deciding what is right for the world. His alternative seems to be flying around raising money from whomever he can, which might include people the good people of San Francisco find unsavoury.

Altman also claims that today’s AI development is taking baby steps compared with what is to come, but that a new paradigm is required before artificial general intelligence emerges. This refers to the leap in the theory of knowledge required before we can code the creation of intelligence into machines, which I discussed last week.

While the world debates who is the righteous guardian of our fate, and finance types remind us that money talks and you don’t take billions from Microsoft without expecting to pay at least a pound of flesh, the AI that OpenAI has unleashed on the world is already in a position to cause chaos.

2024 - The Year of Elections

There are national elections next year in the US, the UK, India, Taiwan, Ukraine and Russia, as well as in 15 African countries, 13 European countries plus the European Parliament, six more nations in each of Asia and the Americas, and Palau. Some are more predictable than others, but all carry the risk of disinformation spread by artificial intelligence tools.

Researchers at University College London found that humans are more likely to judge AI-generated white faces to be real than photographs of real white faces. The finding did not hold for other ethnicities, although it is probably only a matter of time.

This result echoes the Turing Test, which is popularly understood as a competition to see whether chatbots can fool humans, making it a test of human fallibility rather than machine intelligence. By reproducing faces that humans perceive as lifelike, AI can deceive us without reaching anything we would consider general intelligence.

This is a more immediate threat to society than a machine that enslaves us, and it is coming to an election near you shortly.

The Exponential Age

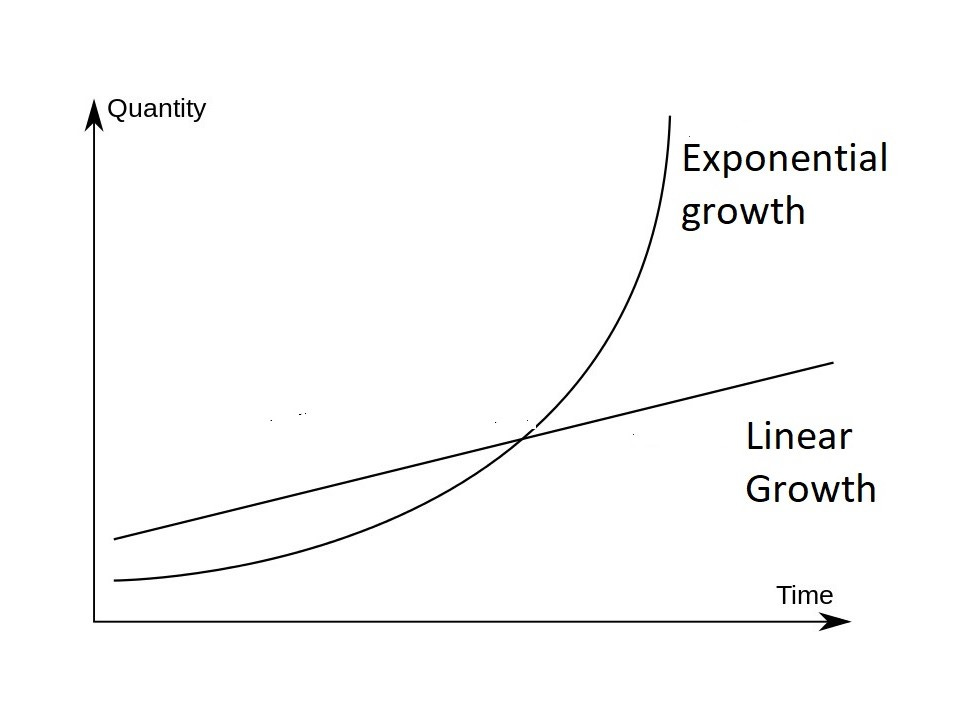

What does it mean for technology to develop exponentially? It means capability doubles over a predictable period of time. At first the improvements are imperceptible, but by the time most people notice, they are unstoppable. A billionaire whose wealth doubled last year had made ‘only’ $500 million over their entire career up to that point.

This phenomenon has upturned the financial world. Investors of a certain age were taught that growth fluctuated around an upward-sloping line and would revert to the mean once it deviated too far above or below trend. Exponential systems do not revert to the mean.
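To make the contrast concrete, here is a minimal sketch in Python comparing the investor’s straight-line trend with a doubling series. The starting value of 1, the step of 1 per period and the ten periods are illustrative assumptions, not figures from any dataset. In the early periods the two series are hard to tell apart, which is why exponential change goes unnoticed at first.

```python
def linear_trend(start: float, step: float, periods: int) -> list[float]:
    """Add a fixed amount each period: the 'upward-sloping line'."""
    return [start + step * t for t in range(periods + 1)]


def doubling(start: float, periods: int) -> list[float]:
    """Double once per period: value(t) = start * 2**t."""
    return [start * 2**t for t in range(periods + 1)]


# Illustrative parameters only (assumed, not taken from the article)
linear = linear_trend(start=1.0, step=1.0, periods=10)
exponential = doubling(start=1.0, periods=10)

for t, (lin, exp_value) in enumerate(zip(linear, exponential)):
    print(f"period {t:2d}: linear {lin:6.0f}   doubling {exp_value:6.0f}")

# In a doubling series, the final period adds as much as the series
# had reached in total before it (the billionaire's "last year")
last_gain = exponential[-1] - exponential[-2]
print(f"gain in final period: {last_gain:.0f}, level before it: {exponential[-2]:.0f}")
```

After ten periods the line has reached 11 while the doubling series has reached 1,024, and half of that arrived in the last period alone. This mirrors the billionaire who made $500 million over a career and then another $500 million in a single year.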

Value investors like to look back over a hundred years or more to show cycles and nod sagely about the eventual return to trend. They cite Benjamin Graham and his student Warren Buffett, whose wisdom is 70 years old. At that time the transistor age was just beginning with Shockley’s invention of the junction transistor in 1948, which came 74 years after the invention of the AC-DC converter, or rectifier, in 1874. It was a different era.

In the exponential theory of technology there are four layers of development. The foundational layer houses the infrastructure for technology: energy, data and processing power. The productivity layer combines these factors and delivers value to the digital layer in the form of currencies, payment systems, verification and a host of other applications. The final layer is the human layer, where we get life-enhancing results in the form of wearables, breakthroughs in fighting disease and longer lifespans.

It was assumed that intelligence, which is the transformation of data into information, sat in the productivity layer, but that may not be the case. When data is automatically and cheaply transformed into information in the blink of an eye, intelligence becomes foundational. Access to intelligence becomes a human right, and it is artificial intelligence that makes it available to the wider world.

If so, we won’t question the intelligence. Scientists and journalists like to say check your sources, but when you don’t know how the machine arrived at its answer, what does that mean? As machines combine ever larger amounts of data into patterns that humans cannot perceive, the resulting intelligence is presented to us as fact, and we accept it.

Even if we could check machine thinking, the volume of data makes this impractical. The chart shows the predicted amount of data out to 2035. We are merely in the foothills today.

Where is this data coming from? A small part comes from our online activity: posting, sharing photos and a million newsletters like this one, where once there were a few hundred newspapers. More comes as the world goes digital and an online replica is created for everything we have in the real world. But the big boost to data is the constant interaction of connected devices and applications: the internet of things.

Consider wearable technology, such as an Apple Watch or a Levels health monitor. It records data about you constantly, and this data is stored permanently and analysed by corporate algorithms. Partly this is for your benefit, such as showing your blood pressure or glucose level, but who knows what else the machine discovers in the data? Disease, genetic weakness or an inability to form lasting relationships? No one knows.

We Don’t Check the Data

By one widely quoted estimate, humans make around 35,000 decisions a day. The vast majority are automatic, governed by rules of thumb that spare us from thinking too much. These rules trigger emotional responses and are easily manipulated.

Almost 200 cognitive biases have been named, each a way in which we take a shortcut around thinking to deliver an instant reaction. Examples include confirmation bias, whereby we are drawn to information that supports what we already believe, and the halo effect, where we look favourably on people because they are tall, handsome or famous. This is how celebrities get us to surrender our data to crypto scams and Chinese social media apps that store our data forever and for unknown purposes.

Occasionally we stop and think about things and apply logic to overcome our emotions. We weigh the odds, review the data and make a thoughtful decision. Generally we call this work, and it is taxing: the brain, which is 2% of our body weight, consumes 20% of our calorie intake.

But when the machines are serving up intelligence derived from data through patterns that we cannot perceive, and doing it in such volume that the intelligence is everywhere, how will we use our logical brain to question this?

If a face looks human, then no amount of staring at it is going to alter that impression. We can contemplate our existence like the 17th-century philosopher Descartes, but all he could be certain of was that he was real because he was thinking. When the machines are thinking for us, we will accept that what they show us is real.

It’s important to stress that none of this depends on artificial general intelligence. All it requires is algorithms that process quantities of data far beyond human capability and spot patterns we cannot conceive of. That is what AI does today, and if your job involves checking the data, it’s time to look for another.

Next November Nightmare

It’s a rainy evening on the first Tuesday in November 2024. You stay up to watch the results of another tight election. Joe Biden pops up on social media conceding and congratulating Donald Trump on becoming the 47th President of the United States, and only the second president after Grover Cleveland to serve two non-consecutive terms.

Fox News declares for Trump. CNN rebuts this, noting that uncounted postal votes swing Democrat and disputing Biden’s concession. Trump supporters who have gathered in the capital prepare to march to the White House.

Suddenly Charles Q. Brown Jr., Chairman of the Joint Chiefs of Staff, is all over the screens declaring a shoot-on-sight policy for unauthorised personnel within 50 yards of government buildings. No one knows what ‘unauthorised’ means. Does it apply to commuters and tourists? But wait, what’s that? It’s you in the crowd heading down Pennsylvania Avenue.

You know you’re at home, but do your family and friends believe you? On screen there are trusted political and media figures, and they look and sound authentic. When Biden emerges to deny conceding, which Biden do you believe? Here’s technologist Peter Diamandis talking to his AI-generated self. Which one is real?

A Million Times More Powerful

Jensen Huang, CEO of Nvidia, the chip manufacturer at the heart of the AI boom, predicts that models will be one million times more powerful than ChatGPT within 10 years. This will mean that information is the preserve of the machines, and we will focus on the value and human layers to determine whether intelligence is good or bad. The ends will justify the means.

Jeremy Bentham devised such a system in the 18th century. Act utilitarianism evaluates any action by its consequences. Bentham argued that an action should be judged by the amount of happiness and pain it causes, a principle often summarised as the greatest good for the greatest number. It’s a system that potentially allows the majority to persecute a minority.

Bad data leading to bad outcomes has always been a concern with AI learning from the well of human knowledge. When the well is polluted, machine intelligence will be too. Racism in selecting job candidates and prejudice in choosing university students become enshrined by copying what has gone before.

In our near future the AI does what is best for most of us based on analysing the data from all of us. We don’t know how it decides this.

But before we reach this science fiction scenario we must navigate next year, when humans will use existing AI to create fake news and undermine trust in elections. Democracy will be on the way out if not enough people believe the results.

Of Blockchains and AI

One consequence of the surge in interest in artificial intelligence has been to sideline news of blockchains and cryptocurrencies. The US Securities and Exchange Commission has taken out most of the bad actors, and bitcoin is having a good year, up over 120% to date. The worry for crypto is that it will not develop large enough real-world applications, which is the precise opposite of our worry about artificial intelligence.

There are ways in which blockchain and AI combine. AI will need to buy elements of the foundational layer, such as energy, data and processing power, and a trustless authentication protocol such as Bitcoin may be the preferred way to do this. After all, why would machines trust humans to be in charge of a currency?

And in a world where AI can replicate us in social media posts far faster than we can respond, we will need a way to authenticate ourselves online. This is what blockchain provides: a rules-based digital signature without which nothing may be done in our name. The challenge for humans is to make access to the signature easy to use without allowing machines to copy it.
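The idea behind a digital signature is simple. Only the holder of a secret can produce one, but anyone can check it, and a forged or altered message fails the check. Below is a minimal sketch in Python of one of the simplest such schemes, a Lamport one-time signature built only on SHA-256 from the standard library. It is a toy for illustration and not how Bitcoin works, since Bitcoin signs transactions with elliptic-curve signatures over the secp256k1 curve. Each key pair must sign only one message, and the function names and sample message are hypothetical.

```python
import hashlib
import secrets


def h(data: bytes) -> bytes:
    """SHA-256 hash used throughout this toy scheme."""
    return hashlib.sha256(data).digest()


def keygen() -> tuple[list[tuple[bytes, bytes]], list[tuple[bytes, bytes]]]:
    """Secret key: 256 pairs of random 32-byte values. Public key: their hashes."""
    secret = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(h(a), h(b)) for a, b in secret]
    return secret, public


def digest_bits(message: bytes) -> list[int]:
    """The 256 bits of the message's SHA-256 digest, most significant first."""
    return [(byte >> (7 - i)) & 1 for byte in h(message) for i in range(8)]


def sign(message: bytes, secret: list[tuple[bytes, bytes]]) -> list[bytes]:
    """Reveal one secret from each pair, chosen by the matching digest bit.
    Signing a second message with the same key leaks more secrets (one-time only)."""
    return [pair[bit] for pair, bit in zip(secret, digest_bits(message))]


def verify(message: bytes, signature: list[bytes], public: list[tuple[bytes, bytes]]) -> bool:
    """Accept only if every revealed value hashes to the expected public half."""
    if len(signature) != 256:
        return False
    return all(
        h(value) == pair[bit]
        for value, pair, bit in zip(signature, public, digest_bits(message))
    )


secret_key, public_key = keygen()
message = b"I concede the election"  # hypothetical example message
signature = sign(message, secret_key)

print(verify(message, signature, public_key))                    # True
print(verify(b"I do not concede", signature, public_key))        # False
```

A deepfake can copy someone's face and voice, but without the secret key it cannot produce a signature that verifies against that person's public key. That is why the hard part, as the paragraph above says, is keeping the key easy for humans to use while keeping it out of reach of machines.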

I vote we don’t use facial recognition.